Learn how to install Apache Kafka on CentOS 8 with this step-by-step guide. It covers the prerequisites, installation commands, and configuration tips needed to get a single Kafka broker up and running.

What is Apache Kafka?

Apache Kafka is an open-source distributed event streaming platform used for building real-time data pipelines and streaming applications. Initially developed at LinkedIn and later donated to the Apache Software Foundation, Kafka is designed to handle high-throughput, low-latency data processing.

Key Features of Apache Kafka

- High Throughput: Kafka is capable of handling millions of messages per second, making it suitable for large-scale data applications.

- Scalability: It can be easily scaled horizontally by adding more brokers to distribute the load and ensure high availability.

- Durability: Kafka persists messages on disk and replicates them across multiple brokers to ensure data durability and fault tolerance.

- Fault Tolerance: The system is designed to be highly resilient, with the ability to recover from node failures seamlessly.

- Real-Time Processing: Kafka allows real-time processing of streams of data, which is crucial for applications needing instant insights and actions.

- Decoupling of Systems: Kafka decouples data producers from consumers, enabling independent scaling and evolution of different parts of a data architecture.

- Multiple Consumers: A single stream of data can be consumed by multiple applications, allowing for versatile data processing pipelines.

Core Concepts

- Producers: Applications that send data to Kafka topics.

- Consumers: Applications that read data from Kafka topics.

- Brokers: Kafka servers that store data and serve client requests.

- Topics: Categories or feeds to which records are published.

- Partitions: Subdivisions of topics to allow parallel processing and scalability.

- Replicas: Copies of partitions distributed across brokers to ensure fault tolerance.

Common Use Cases

- Log Aggregation: Collecting and processing logs from various sources in a centralized manner.

- Real-Time Analytics: Processing streams of data in real-time for instant insights and actions.

- Data Integration: Facilitating the integration of different data systems by streaming data between them.

- Event Sourcing: Capturing changes in the state of applications as a sequence of events.

Apache Kafka’s ability to handle large-scale, real-time data streams with high reliability makes it a popular choice for various industries, including finance, telecommunications, retail, and more. Its robust architecture and extensive ecosystem of tools and connectors make it a versatile and powerful platform for modern data-driven applications.

Apache Flink vs Kafka

Apache Flink and Apache Kafka are both powerful tools for handling large-scale data, but they serve different purposes and are often used together in a complementary manner. Here’s a comparison of the two:

Apache Kafka

Purpose: Kafka is a distributed event streaming platform designed to handle high-throughput, real-time data feeds. It is primarily used for messaging, log aggregation, and real-time data pipelines.

Key Features:

- Message Broker: Kafka acts as a high-throughput, fault-tolerant message broker, allowing systems to publish and subscribe to streams of records.

- Durability and Fault Tolerance: Kafka ensures data durability by persisting messages on disk and replicating them across multiple brokers.

- Scalability: Kafka is designed to scale horizontally by adding more brokers to handle increased load.

- Low Latency: Kafka provides low-latency message delivery, suitable for real-time data processing.

- Decoupling Systems: Kafka decouples data producers from consumers, enabling independent scaling and evolution of different parts of a data architecture.

- Multiple Consumers: A single stream of data can be consumed by multiple applications for various use cases.

Common Use Cases:

- Real-time analytics

- Log aggregation

- Stream processing pipelines

- Event sourcing

Apache Flink

Purpose: Flink is a stream processing framework that excels at complex event processing and real-time analytics. It is designed for high-performance, low-latency stream and batch data processing.

Key Features:

- Stream Processing: Flink provides robust support for stateful stream processing, allowing for real-time data transformation and analytics.

- Low Latency: Flink is optimized for low-latency processing, enabling near-instantaneous analysis and actions on data streams.

- Fault Tolerance: Flink’s checkpointing mechanism ensures state consistency and recovery in case of failures.

- Scalability: Flink can scale horizontally to handle large volumes of data by adding more nodes to the cluster.

- Complex Event Processing: Flink supports complex event processing (CEP) with its powerful event pattern matching capabilities.

- Batch Processing: In addition to stream processing, Flink can handle batch data processing, making it versatile for various data workloads.

Common Use Cases:

- Real-time data analytics

- Stream and batch data processing

- Complex event processing

- Machine learning pipelines

- Data enrichment

Comparison Summary

- Functionality: Kafka is primarily a message broker and event streaming platform, while Flink is a stream processing framework designed for complex event processing and real-time analytics.

- Use Cases: Kafka is used for data ingestion, buffering, and event storage, whereas Flink is used for processing and analyzing data streams in real-time.

- Integration: Kafka and Flink are often used together, where Kafka handles data ingestion and Flink processes the ingested data in real-time.

- Scalability and Fault Tolerance: Both systems are highly scalable and fault-tolerant, but they achieve this through different mechanisms tailored to their specific use cases.

In summary, Apache Kafka and Apache Flink serve distinct but complementary roles in a modern data architecture. Kafka is ideal for real-time data streaming and event storage, while Flink excels at processing and analyzing those streams in real-time. Using them together leverages the strengths of both platforms for building robust, scalable, and real-time data-driven applications.

Environment Specification

We are using a minimal CentOS 8 KVM virtual machine with the following specifications.

- CPU – 3.4 GHz (2 cores)

- Memory – 2 GB

- Storage – 20 GB

- Operating System – CentOS 8.2

- Hostname – kafka-01.centlinux.com

- IP Address – 192.168.116.234/24

Update your Linux Operating System

Connect to kafka-01.centlinux.com as the root user using an SSH client.

Update the installed software packages on your Linux operating system. We are using CentOS Linux in this installation guide, so the dnf command can be used for this purpose.

dnf update -y

Check the Linux operating system and kernel versions used in this installation guide.

uname -r

cat /etc/redhat-release

Output:

4.18.0-193.28.1.el8_2.x86_64

CentOS Linux release 8.2.2004 (Core)

Install Java on CentOS 8

Apache Kafka is written in Java and Scala, so it needs a Java runtime, version 8 or later.

OpenJDK 11 is available in the standard CentOS 8 repositories, so you can install it by executing the following command.

dnf install -y java-11-openjdk

Install Apache Kafka on CentOS 8
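
Before downloading Kafka, it may be worth confirming that the Java runtime from the previous step is the one found on the PATH. The following is a minimal sketch, not part of the original procedure: java_major is a hypothetical helper name that extracts the major version from the first line of java -version output, handling both the legacy 1.8 style and the newer 11 style of version strings.

```shell
# Hypothetical helper: print the Java major version found in a
# `java -version` banner line passed as the first argument.
java_major() {
    # Pull out the quoted version string, e.g. 11.0.9 or 1.8.0_272
    ver=$(printf '%s\n' "$1" | sed -n 's/.*version "\([^"]*\)".*/\1/p')
    case $ver in
        1.*) ver=${ver#1.} ;;   # legacy scheme: 1.8.0_272 means Java 8
    esac
    # Keep only the leading number (drop everything from the first . _ or -)
    printf '%s\n' "${ver%%[._-]*}"
}
```

For example, java_major "$(java -version 2>&1 | head -n 1)" should print 11 on the system described in this guide. The redirection is needed because java -version writes its banner to standard error.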

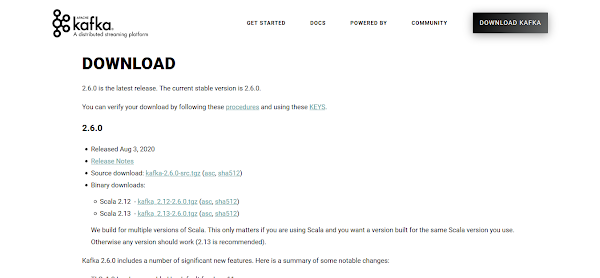

Kafka is distributed under the Apache License 2.0 and can be downloaded from the official Apache Kafka website.

Copy the URL of the required Apache Kafka version from the downloads page.

Use the copied URL with the wget command to download the Apache Kafka software directly from the Linux command line. Older releases are eventually moved from downloads.apache.org to archive.apache.org/dist/kafka/, so use the archive URL if the download below returns a 404 error.

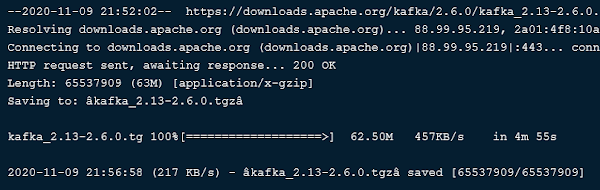

cd /tmp

wget https://downloads.apache.org/kafka/2.6.0/kafka_2.13-2.6.0.tgz
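
Apache projects typically publish a checksum file next to each release, so the tarball can be verified before extraction. The sketch below assumes a .sha512 file has been downloaded alongside the tarball (the file name kafka_2.13-2.6.0.tgz.sha512 is an assumption based on the usual Apache naming), and the helper names normalize_sha512 and verify_sha512 are hypothetical. It accepts either the plain sha512sum layout or the multi-line "file: HEX HEX ..." layout.

```shell
# Hypothetical helper: reduce a checksum file to one lowercase hex string.
# Handles "HEX  filename" as well as "filename: HEX HEX ..." split over lines.
normalize_sha512() {
    tr -d ' \n\r\t' < "$1" | sed -e 's/^[^:]*://' | tr 'A-F' 'a-f' | cut -c1-128
}

# Hypothetical helper: succeed only if the file matches the published checksum.
verify_sha512() {
    file=$1
    sumfile=$2
    expected=$(normalize_sha512 "$sumfile")
    actual=$(sha512sum "$file" | cut -d' ' -f1)
    [ -n "$expected" ] && [ "$expected" = "$actual" ]
}

# Example (assumed file names, run from /tmp after both downloads):
#   verify_sha512 kafka_2.13-2.6.0.tgz kafka_2.13-2.6.0.tgz.sha512 && echo "checksum OK"
```

A mismatch usually means an incomplete download, so fetching the tarball again is the first thing to try.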

Extract the downloaded tarball with the tar command.

tar xzf kafka_2.13-2.6.0.tgz

Now, move the extracted files to the /opt/kafka directory.

mv kafka_2.13-2.6.0 /opt/kafka

Install ZooKeeper on CentOS 8

The Kafka version used in this guide requires the ZooKeeper service for cluster coordination. However, the Kafka documentation states:

“Soon, ZooKeeper will no longer be required by Apache Kafka.”

For now, you have to start a ZooKeeper service before the Kafka server.

ZooKeeper scripts are bundled with the Kafka distribution, and you can use them to run a ZooKeeper server.

Create a systemd service unit for Apache ZooKeeper.

cd /opt/kafka/

vi /etc/systemd/system/zookeeper.service

Add the following directives in this file.

[Unit]

Description=Apache Zookeeper server

Documentation=http://zookeeper.apache.org

Requires=network.target remote-fs.target

After=network.target remote-fs.target

[Service]

Type=simple

ExecStart=/usr/bin/bash /opt/kafka/bin/zookeeper-server-start.sh /opt/kafka/config/zookeeper.properties

ExecStop=/usr/bin/bash /opt/kafka/bin/zookeeper-server-stop.sh

Restart=on-abnormal

[Install]

WantedBy=multi-user.target

Create Systemd Service for Apache Kafka

Similarly, create a systemd service unit for Kafka server.

vi /etc/systemd/system/kafka.service

Add the following directives therein.

[Unit]

Description=Apache Kafka Server

Documentation=http://kafka.apache.org/documentation.html

Requires=zookeeper.service

After=zookeeper.service

[Service]

Type=simple

Environment="JAVA_HOME=/usr/lib/jvm/jre-11-openjdk"

ExecStart=/usr/bin/bash /opt/kafka/bin/kafka-server-start.sh /opt/kafka/config/server.properties

ExecStop=/usr/bin/bash /opt/kafka/bin/kafka-server-stop.sh

[Install]

WantedBy=multi-user.target

Enable and start the Apache ZooKeeper and Kafka services.

systemctl daemon-reload

systemctl enable --now zookeeper.service

systemctl enable --now kafka.service

Verify the status of the Apache Kafka service.

systemctl status kafka.service
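
With Type=simple, systemd reports a service as active as soon as the start script is launched, which can be a few seconds before the broker accepts connections. As a rough sketch, the hypothetical helper wait_for_port below polls a TCP port until it opens, using bash's /dev/tcp pseudo-device and the coreutils timeout command.

```shell
# Hypothetical helper: wait until host:port accepts TCP connections.
# Usage: wait_for_port HOST PORT [TRIES]   (one attempt per second, default 30)
wait_for_port() {
    host=$1
    port=$2
    tries=${3:-30}
    i=0
    while [ "$i" -lt "$tries" ]; do
        # /dev/tcp is a bash feature, so the probe runs in a bash subprocess
        if timeout 1 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null; then
            return 0
        fi
        i=$((i + 1))
        sleep 1
    done
    return 1
}

# Example: wait_for_port localhost 9092 30 && echo "broker is accepting connections"
```

Running this before creating the first topic avoids connection errors from kafka-topics.sh while the broker is still starting.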

Create a Topic in Apache Kafka Server

Create a topic in your Apache Kafka server.

/opt/kafka/bin/kafka-topics.sh --create --topic centlinux --bootstrap-server localhost:9092

Output:

Created topic centlinux.

To view the details of the topic, run the following script at the Linux command line.

/opt/kafka/bin/kafka-topics.sh --describe --topic centlinux --bootstrap-server localhost:9092

Output:

Topic: centlinux PartitionCount: 1 ReplicationFactor: 1 Configs: segment.bytes=1073741824

Topic: centlinux Partition: 0 Leader: 0 Replicas: 0 Isr: 0
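
The describe output above shows the defaults of one partition and a replication factor of one. As an illustrative sketch, the hypothetical helpers below create a topic with an explicit partition count through the --partitions and --replication-factor options of kafka-topics.sh, and read the PartitionCount field back from the describe output. KAFKA_BIN is a variable introduced here for illustration. On this single-broker setup the replication factor cannot exceed 1.

```shell
# Assumed install location from this guide; override if Kafka lives elsewhere.
KAFKA_BIN="${KAFKA_BIN:-/opt/kafka/bin}"

# Hypothetical helper: create a topic with the given number of partitions.
# Usage: create_topic NAME PARTITIONS
create_topic() {
    "$KAFKA_BIN/kafka-topics.sh" --create --topic "$1" --partitions "$2" \
        --replication-factor 1 --bootstrap-server localhost:9092
}

# Hypothetical helper: read `kafka-topics.sh --describe` output on stdin
# and print the value of the PartitionCount field.
partition_count() {
    sed -n 's/.*PartitionCount: *\([0-9][0-9]*\).*/\1/p' | head -n 1
}

# Example (the topic name "orders" is made up):
#   create_topic orders 3
#   "$KAFKA_BIN/kafka-topics.sh" --describe --topic orders \
#       --bootstrap-server localhost:9092 | partition_count
```

More partitions allow more consumers in the same group to read in parallel, which is the scalability property described under Core Concepts.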

Add some sample events to your topic. Type each event at the > prompt and press Ctrl+C when finished.

/opt/kafka/bin/kafka-console-producer.sh --topic centlinux --bootstrap-server localhost:9092

Example session:

>This is the First event.

>This is the Second event.

>This is the Third event.

>^C#

To view all the events stored in a topic, execute the following script at the Linux command line.

/opt/kafka/bin/kafka-console-consumer.sh --topic centlinux --from-beginning --bootstrap-server localhost:9092

Output:

This is the First event.

This is the Second event.

This is the Third event.

^CProcessed a total of 3 messages
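
The interactive session above can also be scripted. The following is a minimal sketch, assuming the same topic and broker address as in this guide: produce_lines and consume_n are hypothetical helper names, and KAFKA_BIN and BOOTSTRAP are variables introduced here for illustration. The console producer reads events from standard input, and the consumer's --max-messages option makes it exit after a fixed number of events instead of waiting for Ctrl+C.

```shell
# Assumed locations from this guide; both can be overridden from the environment.
KAFKA_BIN="${KAFKA_BIN:-/opt/kafka/bin}"
BOOTSTRAP="${BOOTSTRAP:-localhost:9092}"

# Hypothetical helper: send each line of stdin to TOPIC as one event.
# Usage: printf 'a\nb\n' | produce_lines TOPIC
produce_lines() {
    "$KAFKA_BIN/kafka-console-producer.sh" --topic "$1" \
        --bootstrap-server "$BOOTSTRAP"
}

# Hypothetical helper: print the first COUNT events of TOPIC, then exit.
# Usage: consume_n TOPIC COUNT
consume_n() {
    "$KAFKA_BIN/kafka-console-consumer.sh" --topic "$1" --from-beginning \
        --max-messages "$2" --bootstrap-server "$BOOTSTRAP"
}

# Example:
#   printf 'first\nsecond\nthird\n' | produce_lines centlinux
#   consume_n centlinux 3
```

This form is convenient in smoke tests, where a script needs to confirm that an event written to the topic can be read back.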

Apache Kafka is now installed on CentOS / RHEL 8, and the broker is listening on port 9092.

Final Thoughts

By following this guide, you should now have ZooKeeper and a single Kafka broker running as systemd services on CentOS 8, along with a test topic that confirms events can be produced and consumed.

FAQs

1. Can I run Apache Kafka on CentOS 8 without Java?

No. Apache Kafka runs on the Java Virtual Machine, so you must install a compatible Java runtime, such as OpenJDK 11, before starting Kafka.

2. How do I change the default Kafka broker ID?

You can set the broker ID in the server.properties configuration file by modifying the broker.id parameter to a unique integer.
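
As a sketch of that edit, the hypothetical helper below rewrites the broker.id line in a properties file with sed, or appends the line if it is missing. It assumes GNU sed (for the -i option) and the /opt/kafka/config/server.properties path used in this guide.

```shell
# Hypothetical helper: set broker.id in a Kafka properties file.
# Usage: set_broker_id FILE ID
set_broker_id() {
    file=$1
    id=$2
    # Reject anything that is not a non-negative integer
    case $id in
        ''|*[!0-9]*)
            echo "broker id must be a non-negative integer" >&2
            return 1
            ;;
    esac
    if grep -q '^broker\.id=' "$file"; then
        # Replace the existing setting in place (GNU sed)
        sed -i "s/^broker\.id=.*/broker.id=$id/" "$file"
    else
        printf 'broker.id=%s\n' "$id" >> "$file"
    fi
}

# Example: set_broker_id /opt/kafka/config/server.properties 1
```

Restart kafka.service afterwards for the change to take effect. This is best done before the broker's first start, because a broker that already has data may refuse to start if the ID recorded in its data directory no longer matches.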

3. What firewall ports need to be open for Kafka on CentOS 8?

By default, you need to open TCP port 9092 so that clients and other brokers can reach the Kafka broker. Port 2181 only needs to be opened if other hosts must connect to the ZooKeeper instance on this server.
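
On CentOS 8 these ports are normally opened with firewalld. The sketch below wraps the firewall-cmd calls in a hypothetical helper named open_kafka_ports. FIREWALL_CMD is a variable introduced here so that the commands can be previewed with a dry run, and it assumes firewalld is the active firewall.

```shell
# Set FIREWALL_CMD=echo to print the commands instead of running them.
FIREWALL_CMD="${FIREWALL_CMD:-firewall-cmd}"

# Hypothetical helper: open the Kafka port, and optionally the ZooKeeper port.
# Usage: open_kafka_ports [--with-zookeeper]
open_kafka_ports() {
    $FIREWALL_CMD --permanent --add-port=9092/tcp || return 1
    if [ "${1:-}" = "--with-zookeeper" ]; then
        # Only needed when remote hosts must reach ZooKeeper
        $FIREWALL_CMD --permanent --add-port=2181/tcp || return 1
    fi
    # Apply the permanent rules to the running firewall
    $FIREWALL_CMD --reload
}

# Example: open_kafka_ports
```

Leaving 2181 closed on a single-node setup keeps ZooKeeper reachable only from the local machine.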

4. Is ZooKeeper mandatory for Kafka on CentOS 8?

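It depends on the Kafka version. The 2.6.0 release used in this guide requires ZooKeeper to manage the cluster. KRaft mode, which removes the ZooKeeper dependency, first appeared as an early-access feature in Kafka 2.8, was declared production-ready in Kafka 3.3, and became the only option when Kafka 4.0 dropped ZooKeeper support.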

5. How do I troubleshoot Kafka startup failures on CentOS 8?

Check Kafka log files in the logs directory, ensure Java is correctly installed, verify port availability, and confirm the ZooKeeper service is running (if applicable).
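
A quick way to start is to pull the most recent error lines out of the broker log. The sketch below assumes the default log location for the layout used in this guide, /opt/kafka/logs/server.log, and the default log line format with the level after the bracketed timestamp. The helper name kafka_log_errors is hypothetical.

```shell
# Hypothetical helper: show the last N ERROR or FATAL lines of a Kafka log.
# Usage: kafka_log_errors [LOGFILE] [N]
kafka_log_errors() {
    log=${1:-/opt/kafka/logs/server.log}
    n=${2:-20}
    # Default log lines look like: [timestamp] LEVEL message (logger)
    grep -E '\] (ERROR|FATAL) ' "$log" | tail -n "$n"
}

# Example: kafka_log_errors /opt/kafka/logs/server.log 10
```

Because both services run under systemd in this guide, journalctl -u kafka.service -n 50 --no-pager and journalctl -u zookeeper.service -n 50 --no-pager show the same startup messages along with any errors from the unit itself.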
