Learn how the AWK command, the Swiss Army knife of text processing in Linux, can simplify tasks like extracting columns, performing calculations, and manipulating data.


Introduction

When it comes to text processing in Linux, few tools are as powerful and versatile as the AWK command. AWK is a command-line utility for pattern scanning and processing. It’s especially useful for analyzing text files, whether you’re working with log files, CSV files, or other structured data. AWK is widely recognized for how concisely and efficiently it manipulates data from files, standard input, or pipelines.

In this blog post, we’ll dive into the AWK command, its uses, code examples, and why it’s often referred to as the Swiss Army knife of text processing. We’ll also compare it with other common Linux tools like grep, sed, and cut, and wrap up with a handy FAQ section.

What Is the AWK Command?

AWK is a programming language designed for text processing, specifically for scanning files line by line and performing actions on each line based on patterns. Named after its creators Alfred Aho, Peter Weinberger, and Brian Kernighan, AWK is ideal for extracting, manipulating, and analyzing data from structured text.

At its core, an AWK command follows a simple syntax:

awk 'pattern { action }' file

Here’s the breakdown:

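- pattern: A condition that selects lines from the input (e.g., a regular expression such as /error/ or a comparison such as $2 > 50). If you omit the pattern, the action runs on every line.

- action: The statements to run when the pattern matches (e.g., print a value or update a variable). If you omit the action, AWK prints each matching line.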
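To see how the two parts combine, here is a minimal sketch that feeds a few made-up lines to awk through printf instead of a file. It shows a pattern with an action, an action with no pattern (it runs on every line), and a pattern with no action (awk then prints each matching line as-is).

```shell
# Made-up sample input; printf stands in for a real file.
input='apple 3
banana 5
cherry 7'

# Pattern + action: print the first field of lines matching the regex /an/.
printf '%s\n' "$input" | awk '/an/ { print $1 }'
# prints: banana

# Action only: with no pattern, the action runs on every line.
printf '%s\n' "$input" | awk '{ print $2 }'
# prints: 3, 5 and 7, one per line

# Pattern only: with no action, awk prints each matching line unchanged.
printf '%s\n' "$input" | awk '$2 > 4'
# prints: banana 5
#         cherry 7
```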

A Basic Example

Let’s start simple. Say you have a file students.txt containing names and grades, like so:

Alice 90

Bob 85

Charlie 88

David 92

To print the entire content of the file, you can use:

awk '{print $0}' students.txt

The $0 variable represents the entire line, so the output will be:

Alice 90

Bob 85

Charlie 88

David 92

This is the simplest form of an AWK program and a useful starting point for processing text data in Linux.
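If you want to run the examples in this post yourself, one convenient way to create students.txt is a here-document. The sketch below simply reproduces the sample data shown above; it also shows that a bare print is shorthand for print $0.

```shell
# Create the sample file used in the examples.
cat > students.txt <<'EOF'
Alice 90
Bob 85
Charlie 88
David 92
EOF

# Both commands print the file unchanged: print with no arguments prints $0.
awk '{print $0}' students.txt
awk '{print}' students.txt
```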

Common Use Cases for AWK

1. Extracting Specific Columns

AWK’s ability to handle columns makes it great for extracting specific pieces of data. For example, to print the names (first column) from the students.txt file:

awk '{print $1}' students.txt

Output:

Alice

Bob

Charlie

David

This command prints the first field of each line (by default, fields are separated by whitespace).
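Selecting several columns works the same way. This sketch recreates the sample students.txt so it runs on its own, then prints the grade before the name. The comma in print inserts the output field separator (a single space by default); leaving it out concatenates the fields.

```shell
# Recreate the sample data so this snippet is self-contained.
printf 'Alice 90\nBob 85\nCharlie 88\nDavid 92\n' > students.txt

# Swap the columns: grade first, then name.
awk '{print $2, $1}' students.txt
# prints: 90 Alice
#         85 Bob
#         88 Charlie
#         92 David

# No comma: the fields are joined with nothing in between.
awk '{print $2 $1}' students.txt
# first line printed: 90Alice
```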

2. Field Separator Customization

The AWK command can easily handle different field separators. By default, AWK splits fields on runs of whitespace (spaces or tabs), but you can specify a custom separator. For example, suppose you have a CSV file named students.csv:

Alice,90

Bob,85

Charlie,88

David,92

You can use a comma as the field separator:

awk -F ',' '{print $1}' students.csv

This will output:

Alice

Bob

Charlie

David

3. Conditional Statements

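AWK supports conditions both as patterns placed before an action and as if-else statements inside an action, which lets you filter and manipulate data based on conditions. For example, to print only students with a grade above 90, use a comparison as the pattern: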

awk '$2 > 90 {print $1}' students.txt

Output:

David

This filters out anyone whose grade is not greater than 90 (so Alice, with exactly 90, is excluded) and prints only the names of those who meet the condition.
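The command above uses the condition as a pattern. An if-else statement, by contrast, lives inside the action, which suits cases where every line should produce output but that output depends on a condition. The cut-off of 90 and the labels "excellent" and "good" are arbitrary, chosen only for illustration.

```shell
printf 'Alice 90\nBob 85\nCharlie 88\nDavid 92\n' > students.txt

# Every line is printed; the label depends on the grade in field 2.
awk '{
    if ($2 >= 90)
        print $1, "excellent"
    else
        print $1, "good"
}' students.txt
# prints: Alice excellent
#         Bob good
#         Charlie good
#         David excellent
```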

4. Performing Calculations

AWK can perform calculations on numeric fields. For instance, to calculate the average grade of all students:

awk '{sum += $2} END {print "Average grade:", sum/NR}' students.txt

Explanation:

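- sum += $2: Adds the grade (second column) to the sum variable. AWK variables start out empty, which counts as 0 in arithmetic, so sum needs no initialization.

- END {print "Average grade:", sum/NR}: After all lines are processed, prints the average, where NR is the number of records (lines) read.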

Output:

Average grade: 88.75

5. String Manipulation

AWK has built-in string manipulation functions. Let’s say you want to extract the first letter of each student’s name:

awk '{print substr($1, 1, 1)}' students.txt

Output:

A

B

C

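D

Here, substr($1, 1, 1) extracts the first character from the first field (the name). In general, substr(s, start, length) returns length characters of s beginning at position start, counting from 1.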
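substr is one of several string functions built into POSIX awk. The sketch below, again on the sample students.txt, combines three others: toupper converts to upper case, length returns the number of characters, and index returns the 1-based position of a substring (or 0 if it is absent). Because index is case-sensitive, it finds no lowercase "a" in Alice.

```shell
printf 'Alice 90\nBob 85\nCharlie 88\nDavid 92\n' > students.txt

# Upper-cased name, its length, and the position of the first lowercase "a".
awk '{print toupper($1), length($1), index($1, "a")}' students.txt
# prints: ALICE 5 0
#         BOB 3 0
#         CHARLIE 7 3
#         DAVID 5 2
```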

Using Regex with the AWK Command

Here are three more advanced examples that combine AWK with regular expressions, using the /etc/passwd file (which holds one line of account information per system user):

1. Filter Users with Specific Shells

Let’s say you want to extract the users who are using /bin/bash as their shell. The /etc/passwd file contains user information in the following format:

username:x:UID:GID:full name:home directory:shell

In this example, we use AWK with a regular expression to select the users whose shell is /bin/bash:

awk -F: '$7 ~ /\/bin\/bash/ {print $1, $7}' /etc/passwd

Explanation:

- -F: sets the field separator to a colon (:).

- $7 refers to the 7th field, which is the user’s shell.

- ~ /\/bin\/bash/ uses a regular expression to match the /bin/bash shell (each / is escaped because / also delimits the regex).

- {print $1, $7} prints the username and shell.

Example Output:

root /bin/bash

user1 /bin/bash

user2 /bin/bash

2. Count Users in a Specific Group

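If you want to count how many users have a specific primary group (for example, GID 1001), you can match the GID field of /etc/passwd with an anchored regular expression. Note that /etc/passwd records only each user’s primary group; supplementary group memberships are listed in /etc/group.

awk -F: '$4 ~ /^1001$/ {count++} END {print "Users in group 1001:", count + 0}' /etc/passwd

Explanation:

- $4 refers to the GID (primary group ID) field in /etc/passwd.

- ~ /^1001$/ matches lines where the GID is exactly 1001; the ^ and $ anchors prevent partial matches such as 11001.

- count++ increments the counter for each matching user.

- END {print ...} prints the result after processing all lines; adding 0 makes it print 0 rather than an empty string when no user matches.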

Example Output:

Users in group 1001: 5

3. List All Users with Specific Patterns in Their Username

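If you want to find all users whose usernames contain the pattern admin, regardless of case, you can combine AWK’s tolower() function with a regular expression. POSIX AWK has no case-insensitive flag for regex literals (GNU awk offers the IGNORECASE variable instead), so lower-casing the field first is the portable approach.

awk -F: 'tolower($1) ~ /admin/ {print $1, $3}' /etc/passwd

Explanation:

- $1 refers to the username field, and tolower($1) converts it to lower case before matching.

- ~ /admin/ matches any username containing “admin”, so Admin, ADMIN, and admin all match.

- {print $1, $3} prints the username and the user ID (UID).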

Example Output:

admin 1001

administrator 1002

These examples demonstrate how AWK combined with regular expressions can be a powerful tool for text processing, especially when working with system files like /etc/passwd.
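One detail worth keeping in mind with these patterns: an unanchored regex such as /1001/ matches those digits anywhere in a field, so a GID of 11001 matches too. Anchoring with ^ and $ restricts the match to the whole field. POSIX awk also has no case-insensitive flag for regex literals, so lower-casing the field with tolower() first is a portable way to ignore case. The sketch below uses made-up passwd-style records rather than your real /etc/passwd.

```shell
# Made-up passwd-style records (username:x:UID:GID:full name:home:shell).
sample='alice:x:1001:1001::/home/alice:/bin/bash
bob:x:1002:11001::/home/bob:/bin/sh
Admin2:x:1003:1001::/home/Admin2:/bin/bash'

# Unanchored: also matches bob, whose GID 11001 contains "1001".
printf '%s\n' "$sample" | awk -F: '$4 ~ /1001/ {print $1}'
# prints: alice, bob and Admin2, one per line

# Anchored: matches GID 1001 exactly.
printf '%s\n' "$sample" | awk -F: '$4 ~ /^1001$/ {print $1}'
# prints: alice and Admin2, one per line

# Portable case-insensitive match: lower-case the field before matching.
printf '%s\n' "$sample" | awk -F: 'tolower($1) ~ /admin/ {print $1, $3}'
# prints: Admin2 1003
```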

AWK vs. Other Linux Commands

Let’s compare AWK with other popular text processing tools: grep, sed, and cut.

awk vs. grep

- grep: Primarily used for searching patterns in text. It works line by line and returns lines that match the pattern.

- awk: More versatile, as it combines pattern matching with text manipulation. While grep only outputs matching lines, AWK can perform actions like printing specific columns, performing calculations, and modifying text.

Example:

Using grep to find lines containing “Alice”:

grep 'Alice' students.txt

Using AWK to print Alice’s grade:

awk '$1 == "Alice" {print $2}' students.txt

awk vs. sed

- sed: A stream editor that is used for text transformation and basic text manipulation.

- awk: While sed can perform basic replacements, AWK is better suited to structured text processing because it understands fields and patterns.

For example, replacing the name “Alice” with “Alicia”:

sed 's/Alice/Alicia/' students.txt

AWK can do something similar, but it is more useful for processing files with structured, field-based data.
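For comparison, here is a sketch of the same replacement in awk, followed by a field-aware variant. sub(regex, replacement) replaces the first match in the current line, much like sed’s s command without the g flag; assigning to a field makes awk rebuild the line using the output field separator.

```shell
printf 'Alice 90\nBob 85\nCharlie 88\nDavid 92\n' > students.txt

# Like sed 's/Alice/Alicia/': replace the first "Alice" on each line, print every line.
awk '{sub(/Alice/, "Alicia"); print}' students.txt

# Field-aware: rename only when the first field is exactly "Alice".
# The trailing 1 is an always-true pattern with no action, so every line is printed.
awk '$1 == "Alice" {$1 = "Alicia"} 1' students.txt
```

Both commands print "Alicia 90" followed by the other three lines unchanged; the second one would leave a name like "Alice2" alone, which a plain text substitution would not.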

awk vs. cut

- cut: Used to extract specific columns from a file based on a delimiter.

- awk: More powerful as it can handle multiple conditions, perform arithmetic, and process entire lines or fields.

For example, extracting the first field using cut:

cut -d ' ' -f 1 students.txt

AWK can do this and much more in a single command, as shown earlier.
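One practical difference shows up with repeated delimiters: cut treats every single delimiter character as a field boundary, while awk’s default splitting collapses runs of spaces and tabs. The sketch uses a made-up line with three spaces between name and grade. awk can also print fields in any order, which cut cannot do.

```shell
# Made-up line with three spaces between the name and the grade.
line='Alice   90'

# cut sees empty fields between consecutive spaces, so field 2 is empty.
printf '%s\n' "$line" | cut -d ' ' -f 2

# awk collapses the run of spaces, so $2 is the grade.
printf '%s\n' "$line" | awk '{print $2}'
# prints: 90

# awk can reorder fields freely.
printf '%s\n' "$line" | awk '{print $2, $1}'
# prints: 90 Alice
```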

If you’re serious about leveling up your Linux skills, I highly recommend the Linux Mastery: Master the Linux Command Line in 11.5 Hours by Ziyad Yehia course. It’s a practical, beginner-friendly program that takes you from the basics to advanced command line usage with clear explanations and hands-on exercises. Whether you’re a student, sysadmin, or developer, this course will help you build the confidence to navigate Linux like a pro.

👉 Enroll now through my affiliate link and start mastering the Linux command line today!

Disclaimer: This post contains affiliate links. If you purchase through these links, I may earn a small commission at no extra cost to you, which helps support this blog.

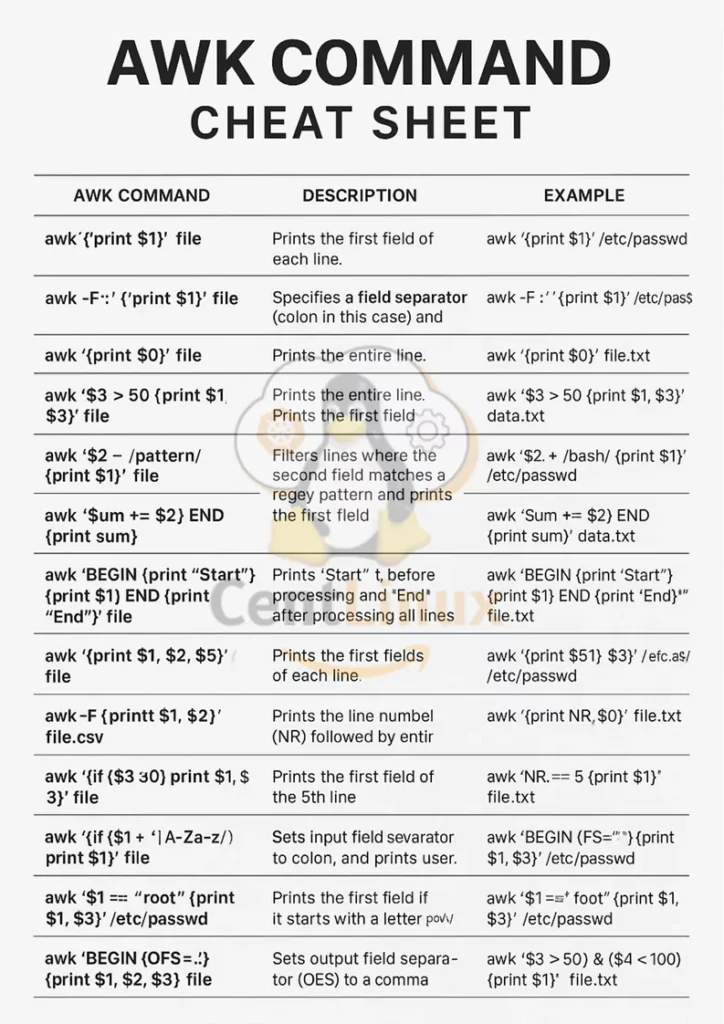

AWK Cheat Sheet

Here’s a handy AWK Cheat Sheet in a table format to quickly reference common AWK commands and their usage:

| AWK Command | Description | Example |
|---|---|---|
| awk '{print $1}' file | Prints the first field of each line. | awk '{print $1}' data.txt |
| awk -F ':' '{print $1}' file | Specifies a field separator (colon in this case) and prints the first field. | awk -F ':' '{print $1}' /etc/passwd |
| awk '{print $0}' file | Prints the entire line. | awk '{print $0}' file.txt |
| awk '$3 > 50 {print $1, $3}' file | Prints the first and third fields if the third field is greater than 50. | awk '$3 > 50 {print $1, $3}' data.txt |
| awk '$2 ~ /pattern/ {print $1}' file | Filters lines where the second field matches a regex pattern and prints the first field. | awk '$2 ~ /^9/ {print $1}' students.txt |
| awk '{sum += $2} END {print sum}' file | Sums the second field and prints the total after processing all lines. | awk '{sum += $2} END {print sum}' data.txt |
| awk 'BEGIN {print "Start"} {print $1} END {print "End"}' file | Prints “Start” before processing and “End” after processing all lines. | awk 'BEGIN {print "Start"} {print $1} END {print "End"}' file.txt |
| awk '{print $1, $2, $3}' file | Prints the first three fields of each line. | awk '{print $1, $2, $3}' data.txt |
| awk -F, '{print $1, $2}' file.csv | Uses a comma as the field separator and prints the first two fields. | awk -F, '{print $1, $2}' file.csv |
| awk '{if ($3 > 50) print $1, $3}' file | Prints the first and third fields only if the third field is greater than 50. | awk '{if ($3 > 50) print $1, $3}' data.txt |
| awk -F: '{if ($3 > 1000) print $1}' /etc/passwd | Prints usernames (first field) if the UID (third field) is greater than 1000. | awk -F: '{if ($3 > 1000) print $1}' /etc/passwd |
| awk '{print $NF}' file | Prints the last field of each line (NF is the number of fields). | awk '{print $NF}' file.txt |
| awk '{print NR, $0}' file | Prints the line number (NR) followed by the entire line. | awk '{print NR, $0}' file.txt |
| awk 'NR == 5 {print $1}' file | Prints the first field of the 5th line. | awk 'NR == 5 {print $1}' file.txt |
| awk 'BEGIN {FS=":"} {print $1, $3}' /etc/passwd | Sets the input field separator to a colon and prints the username and UID. | awk 'BEGIN {FS=":"} {print $1, $3}' /etc/passwd |
| awk '{if ($1 ~ /^[A-Za-z]/) print $1}' file | Prints the first field if it starts with a letter (regex match). | awk '{if ($1 ~ /^[A-Za-z]/) print $1}' data.txt |
| awk -F: '$1 == "root" {print $1, $3}' /etc/passwd | Prints the username and UID for the user “root”. | awk -F: '$1 == "root" {print $1, $3}' /etc/passwd |
| awk 'BEGIN {OFS=","} {print $1, $2, $3}' file | Sets the output field separator (OFS) to a comma and prints the first three fields. | awk 'BEGIN {OFS=","} {print $1, $2, $3}' file.txt |
| awk '($3 > 50) && ($4 < 100) {print $1}' file | Filters lines where the third field is greater than 50 and the fourth is less than 100, and prints the first field. | awk '($3 > 50) && ($4 < 100) {print $1}' data.txt |
| awk 'BEGIN {FS=":"; OFS="\t"} {print $1, $3}' /etc/passwd | Sets both the input field separator (FS) and the output field separator (OFS), then prints the username and UID. | awk 'BEGIN {FS=":"; OFS="\t"} {print $1, $3}' /etc/passwd |

Common AWK Variables:

- FS: Input field separator (defaults to whitespace).

- OFS: Output field separator (defaults to a single space).

- NR: Number of records read so far (the current line number).

- NF: Number of fields in the current record.

- $1, $2, ...: The first, second, and subsequent fields of the current line.

- $0: The entire current line.
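To see several of these variables together, the sketch below feeds made-up colon-separated lines to awk: FS splits each input line, OFS (set here to " | " purely for visibility) joins the printed values, NR numbers the records, NF counts the fields, and $NF is the last field.

```shell
# Made-up colon-separated input with three fields per line.
printf 'root:x:0\nalice:x:1001\n' |
  awk 'BEGIN {FS = ":"; OFS = " | "} {print NR, NF, $1, $NF}'
# prints: 1 | 3 | root | 0
#         2 | 3 | alice | 1001
```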

This cheat sheet summarizes the most commonly used AWK commands so you can quickly find the right one for a text processing task. Whether you’re filtering data, performing calculations, or customizing input and output formats, AWK handles a wide variety of tasks simply and efficiently.



Conclusion

The AWK command is a powerful tool for text processing, offering more flexibility than single-purpose Linux tools such as grep, sed, and cut. Whether you’re extracting columns from a CSV file, performing calculations, or transforming data, AWK can usually handle it in a single short command. Its simple pattern-action syntax and wide range of use cases make it an invaluable tool for anyone working with text-based data.


FAQs

1. What is the meaning of awk '{print $0}'?

$0 refers to the entire line of input. Using print $0 will print the whole line.

2. Can the AWK command handle regular expressions?

Yes. AWK supports regular expressions both as patterns (for example, /error/) and with the ~ and !~ match operators on individual fields, which makes it even more powerful for filtering and transforming data.

3. What is the difference between awk and sed?

Both are used for text manipulation, but sed is a stream editor focused on editing text as it flows through, whereas AWK is designed for more complex processing tasks, including field handling, arithmetic, and reporting.

4. How do I change the field separator in AWK?

Use the -F option followed by the delimiter. For example, to set a comma as the field separator, use:

awk -F ',' '{print $1}' file.csv

5. Can I use the AWK command to process binary files?

AWK is not designed for binary files; it works best with line-oriented text. For binary data, you would need specialized tools such as od or hexdump.
