Learn how to install Apache Spark on Rocky Linux 9 with this step-by-step guide. It covers the Java and Scala prerequisites, the Spark installation itself, the firewall rules, and systemd services for a standalone Spark master and worker.

What is Apache Spark?

Apache Spark is an open-source unified analytics engine for large-scale data processing. Spark provides an interface for programming clusters with implicit data parallelism and fault tolerance. Originally developed at the University of California, Berkeley’s AMPLab, the Spark codebase was later donated to the Apache Software Foundation, which has maintained it since.

Apache Spark has its architectural foundation in the resilient distributed dataset (RDD), a read-only multiset of data items distributed over a cluster of machines and maintained in a fault-tolerant way. The DataFrame API was released as an abstraction on top of the RDD, followed by the Dataset API. In Spark 1.x, the RDD was the primary application programming interface (API), but as of Spark 2.x use of the Dataset API is encouraged even though the RDD API is not deprecated. The RDD technology still underlies the Dataset API.

Spark and its RDDs were developed in 2012 in response to limitations in the MapReduce cluster computing paradigm, which forces a particular linear dataflow structure on distributed programs: MapReduce programs read input data from disk, map a function across the data, reduce the results of the map, and store reduction results on disk. Spark’s RDDs function as a working set for distributed programs that offers a (deliberately) restricted form of distributed shared memory.

Inside Apache Spark the workflow is managed as a directed acyclic graph (DAG). Nodes represent RDDs while edges represent the operations on the RDDs. (Source: Wikipedia)

Apache Spark Alternatives

While Apache Spark is a powerful and widely used distributed computing framework, there are several alternatives available, each with its own features and strengths. Here are some notable alternatives to Apache Spark:

- Hadoop MapReduce: MapReduce is the classic distributed processing framework that inspired Apache Spark. It’s part of the Apache Hadoop project and is known for its reliability and scalability. While not as feature-rich or flexible as Spark, MapReduce is still widely used in certain contexts.

- Apache Flink: Apache Flink is another open-source stream processing framework that offers both batch and stream processing capabilities. It provides low-latency processing, fault tolerance, and native support for event time processing, making it suitable for real-time analytics and event-driven applications.

- Apache Storm: Apache Storm is a real-time stream processing system designed for high-throughput, fault-tolerant processing of large volumes of data. It’s particularly well-suited for use cases requiring low latency and event-driven processing, such as real-time analytics and stream processing pipelines.

- Databricks Delta: Databricks Delta is a unified data management system built on top of Apache Spark. It provides ACID transactions, data versioning, and schema enforcement, making it suitable for building reliable data pipelines and data lakes.

- Google Cloud Dataflow: Google Cloud Dataflow is a fully managed stream and batch processing service offered by Google Cloud Platform. It provides a unified programming model for both batch and stream processing, along with features like auto-scaling and serverless execution.

- Apache Beam: Apache Beam is an open-source unified programming model for batch and stream processing. It supports multiple execution engines, including Apache Spark, Apache Flink, and Google Cloud Dataflow, allowing users to write portable data processing pipelines.

These are just a few examples of alternatives to Apache Spark, each offering unique features and capabilities suited to different use cases and requirements. When choosing a distributed computing framework, consider factors such as scalability, fault tolerance, real-time processing capabilities, and integration with existing systems and tools.

Apache Spark vs Kafka

Apache Spark and Apache Kafka are both popular distributed computing platforms, but they serve different purposes and excel in different areas. Here’s a comparison between the two:

Purpose:

- Apache Spark: Apache Spark is primarily a distributed data processing framework. It’s designed for performing complex data processing tasks such as batch processing, real-time stream processing, machine learning, and graph processing.

- Apache Kafka: Apache Kafka is a distributed event streaming platform. It’s designed for building real-time data pipelines and streaming applications, enabling high-throughput, fault-tolerant messaging and storage of large volumes of data streams.

Use Cases:

- Apache Spark: Apache Spark is commonly used for data analytics, ETL (Extract, Transform, Load) processes, machine learning, and interactive querying. It’s suitable for scenarios where complex data transformations and analytics are required.

- Apache Kafka: Apache Kafka is used for building real-time data pipelines, event-driven architectures, log aggregation, and stream processing. It’s ideal for scenarios where data needs to be ingested, processed, and distributed in real-time.

Architecture:

- Apache Spark: Apache Spark follows a distributed computing model with a master-worker architecture (historically described as master-slave). It utilizes in-memory processing and data parallelism to perform computations efficiently.

- Apache Kafka: Apache Kafka is designed as a distributed messaging system with a distributed commit log architecture. It uses partitions and replication to achieve high scalability and fault tolerance.

Data Processing Model:

- Apache Spark: Apache Spark supports both batch processing and stream processing. It provides high-level APIs for batch processing (e.g., Spark SQL, DataFrame API) and stream processing (e.g., Spark Streaming, Structured Streaming).

- Apache Kafka: Apache Kafka is primarily focused on stream processing. It provides APIs and libraries for building stream processing applications, consuming and processing data in real-time.

Integration:

- Apache Spark: Apache Spark can integrate with Apache Kafka for stream processing tasks. It provides connectors and libraries for reading data from Kafka topics and processing it using Spark Streaming or Structured Streaming.

- Apache Kafka: Apache Kafka can be used as a data source or sink for Apache Spark applications. Spark can consume data from Kafka topics, process it, and write the results back to Kafka or other storage systems.

In summary, while Apache Spark and Apache Kafka are both powerful distributed computing platforms, they serve different purposes and are often used together in complementary ways. Spark is focused on data processing and analytics, while Kafka is focused on real-time data streaming and event-driven architectures. Depending on your requirements, you may choose to use one or both of these platforms in your data processing pipelines.

Environment Specification

We are using a minimal Rocky Linux 9 virtual machine with the following specifications.

- CPU – 3.4 GHz (2 cores)

- Memory – 2 GB

- Storage – 20 GB

- Operating System – Rocky Linux release 9.1 (Blue Onyx)

- Hostname – spark-01.centlinux.com

- IP Address – 192.168.88.136/24

Update your Rocky Linux Server

Using an SSH client, log in to your Rocky Linux server as the root user.

Set a fully qualified domain name (FQDN) and local name resolution for your Linux machine.

hostnamectl set-hostname spark-01.centlinux.com

echo "192.168.88.136 spark-01.centlinux.com spark-01" >> /etc/hosts

Execute the following commands to refresh your DNF cache and update the software packages on your Rocky Linux server.

dnf makecache

dnf update -y

If the above commands update your Linux kernel, reboot the operating system into the new kernel before setting up Apache Spark.

reboot

After the reboot, check the Linux operating system and kernel versions.

uname -r

cat /etc/rocky-release

Output:

5.14.0-162.18.1.el9_1.x86_64

Rocky Linux release 9.1 (Blue Onyx)
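
The echo into /etc/hosts above appends a new line every time it runs. As a sketch of a re-runnable alternative, the helper below appends the entry only when no line for that IP address exists yet. The name add_hosts_entry is hypothetical and not part of any tool. It writes the fully qualified name first, which is the order hosts files conventionally use.

```shell
# add_hosts_entry FILE IP FQDN SHORTNAME
# Hypothetical helper: appends "IP FQDN SHORTNAME" to FILE unless a line
# whose first field is exactly IP is already present.
add_hosts_entry() {
    hosts_file=$1
    ip=$2
    fqdn=$3
    short=$4
    # awk exits 0 only when some line's first field equals the IP
    if awk -v ip="$ip" '$1 == ip { found = 1 } END { exit !found }' "$hosts_file"; then
        return 0
    fi
    printf '%s %s %s\n' "$ip" "$fqdn" "$short" >> "$hosts_file"
}
```

On the server from this guide it could be called as `add_hosts_entry /etc/hosts 192.168.88.136 spark-01.centlinux.com spark-01`. Running it a second time leaves the file unchanged.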

Setup Apache Spark Prerequisites

Apache Spark is written in the Scala programming language, so this guide installs Scala alongside it. Scala, in turn, requires Java.

You may also need a few other packages to download and install Apache Spark.

You can install all of these packages with a single dnf command.

dnf install -y wget gzip tar java-17-openjdk

After installation, verify the version of the active Java.

java --version

Output:

openjdk 17.0.6 2023-01-17 LTS

OpenJDK Runtime Environment (Red_Hat-17.0.6.0.10-3.el9_1) (build 17.0.6+10-LTS)

OpenJDK 64-Bit Server VM (Red_Hat-17.0.6.0.10-3.el9_1) (build 17.0.6+10-LTS, mixed mode, sharing)
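
Spark 3.3.x, the release installed later in this guide, documents support for Java 8, 11 and 17. The sketch below checks the first line of the Java version output against that list. The function names java_major and spark_java_supported are hypothetical, and the parsing assumes the version is the first number on the line, as in the output above.

```shell
# java_major VERSION_LINE
# Prints the major Java version found in the first line of `java --version`
# (or the older `java -version`) output.
java_major() {
    # Capture the first dotted number on the line, e.g. 17.0.6 or 1.8.0_362
    ver=$(printf '%s\n' "$1" | sed -n 's/^[^0-9]*\([0-9][0-9._]*\).*/\1/p' | head -n 1)
    # Legacy scheme: 1.8 means Java 8
    case $ver in
        1.*) ver=${ver#1.} ;;
    esac
    # Keep everything before the first dot or underscore
    printf '%s\n' "${ver%%[._]*}"
}

# spark_java_supported VERSION_LINE
# Succeeds when the major version is one that Spark 3.3.x lists as supported.
spark_java_supported() {
    case $(java_major "$1") in
        8|11|17) return 0 ;;
        *) return 1 ;;
    esac
}
```

A possible use is `spark_java_supported "$(java --version 2>&1 | head -n 1)" || echo "unsupported Java version"`.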

Install Scala Programming Language

We have already written a complete tutorial on installing Scala on Rocky Linux 9, but the essential steps are repeated here for completeness.

Download the Coursier setup launcher with the following wget command.

wget https://github.com/coursier/launchers/raw/master/cs-x86_64-pc-linux.gz

Unzip the downloaded file with the gunzip command.

gunzip cs-x86_64-pc-linux.gz

Rename the extracted file to cs for convenience and make it executable.

mv cs-x86_64-pc-linux cs

chmod +x cs

Run the Coursier setup to install the Scala programming language.

./cs setup

Output:

Checking if a JVM is installed

Found a JVM installed under /usr/lib/jvm/java-17-openjdk-17.0.6.0.10-3.el9_1.x86_64.

Checking if ~/.local/share/coursier/bin is in PATH

Should we add ~/.local/share/coursier/bin to your PATH via ~/.profile, ~/.bash_profile? [Y/n] Y

Checking if the standard Scala applications are installed

Installed ammonite

Installed cs

Installed coursier

Installed scala

Installed scalac

Installed scala-cli

Installed sbt

Installed sbtn

Installed scalafmt

Source ~/.bash_profile once to set up the environment for your current session.

source ~/.bash_profile

Check the version of Scala.

scala -version

Output:

Scala code runner version 3.2.2 -- Copyright 2002-2023, LAMP/EPFL
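
If the scala command is not found after sourcing the profile, the usual cause is that the Coursier bin directory reported in the setup output is missing from PATH. The helper below is a small sketch for checking that. The name path_contains is hypothetical.

```shell
# path_contains DIR [PATH_VALUE]
# Succeeds when DIR is one of the colon-separated entries of PATH_VALUE
# (defaults to the current $PATH).
path_contains() {
    # Wrapping both sides in colons makes the match exact per entry
    case ":${2:-$PATH}:" in
        *":$1:"*) return 0 ;;
        *) return 1 ;;
    esac
}
```

For example, `path_contains "$HOME/.local/share/coursier/bin" || echo "Coursier bin directory is not in PATH"`.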

Install Apache Spark on Rocky Linux 9

Apache Spark is free software and is available for download from its official website.

Copy the download link for Apache Spark and use it with the wget command to download this open-source analytics engine. If this release is no longer on the download mirror, older releases are kept at https://archive.apache.org/dist/spark/.

wget https://dlcdn.apache.org/spark/spark-3.3.2/spark-3.3.2-bin-hadoop3.tgz

Use the tar command to extract Apache Spark, then use the mv command to move it to the /opt directory.

tar xf spark-3.3.2-bin-hadoop3.tgz

mv spark-3.3.2-bin-hadoop3 /opt/spark

Create a file in the /etc/profile.d directory to set up the environment for Apache Spark at session startup. Single quotes keep $PATH literal, so it is expanded at login rather than when the file is written.

echo 'export SPARK_HOME=/opt/spark' >> /etc/profile.d/spark.sh

echo 'export PATH=$PATH:/opt/spark/bin:/opt/spark/sbin' >> /etc/profile.d/spark.sh

Create a user named spark and grant it ownership of the Apache Spark software.

useradd spark

chown -R spark:spark /opt/spark

Configure Linux Firewall

Apache Spark uses a master-worker architecture (older Spark releases and scripts call the workers "slaves"). The Spark master distributes tasks among Spark worker services, which can run on the same node or on other Apache Spark nodes.

Allow the default service ports of the Apache Spark master and worker nodes in the Linux firewall.

firewall-cmd --permanent --add-port=6066/tcp

firewall-cmd --permanent --add-port=7077/tcp

firewall-cmd --permanent --add-port=8080-8081/tcp

firewall-cmd --reload

Create Systemd Services

Create a systemd service for Spark Master by using the vi text editor.

vi /etc/systemd/system/spark-master.service

Add the following directives to this file.

[Unit]

Description=Apache Spark Master

After=network.target

[Service]

Type=forking

User=spark

Group=spark

ExecStart=/bin/bash /opt/spark/sbin/start-master.sh

ExecStop=/opt/spark/sbin/stop-master.sh

[Install]

WantedBy=multi-user.target

Enable and start the Spark Master service.

systemctl enable --now spark-master.service

Check the status of the Spark Master service.

systemctl status spark-master.service

Output:

● spark-master.service - Apache Spark Master

Loaded: loaded (/etc/systemd/system/spark-master.service; enabled; vendor >

Active: active (running) since Tue 2023-03-07 22:13:13 PKT; 4min 13s ago

Main PID: 6856 (java)

Tasks: 29 (limit: 10904)

Memory: 175.8M

CPU: 5.490s

CGroup: /system.slice/spark-master.service

└─6856 /usr/lib/jvm/java-17-openjdk-17.0.6.0.10-3.el9_1.x86_64/bin>

Mar 07 22:13:11 spark-01.centlinux.com systemd[1]: Starting Apache Spark Master>

Mar 07 22:13:11 spark-01.centlinux.com bash[6850]: starting org.apache.spark.de>

Mar 07 22:13:13 spark-01.centlinux.com systemd[1]: Started Apache Spark Master.
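
Beyond systemctl status, it can help to confirm that the master is listening on its default ports: 7077 for cluster traffic and 8080 for the web UI, both opened in the firewall earlier. The helper below is a sketch that scans `ss -tln` output supplied on standard input. The name port_is_listening is hypothetical, and the parsing assumes the usual `ss` column layout with the local address in the fourth field.

```shell
# port_is_listening PORT
# Reads `ss -tln` output on stdin and succeeds when some socket in the
# LISTEN state has a local address ending in ":PORT".
port_is_listening() {
    awk -v port="$1" '
        $1 == "LISTEN" && $4 ~ (":" port "$") { found = 1 }
        END { exit !found }
    '
}
```

On the Spark node this could be used as `ss -tln | port_is_listening 7077 && echo "master port is open"`.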

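Create a systemd service for Spark Slave (the Spark worker) by using the vi text editor. Since Spark 3.1, start-slave.sh is a deprecated wrapper around start-worker.sh; it still works but prints a deprecation warning.

vi /etc/systemd/system/spark-slave.service

Add the following directives to this file.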

[Unit]

Description=Apache Spark Slave

After=network.target

[Service]

Type=forking

User=spark

Group=spark

ExecStart=/bin/bash /opt/spark/sbin/start-slave.sh spark://192.168.88.136:7077

ExecStop=/bin/bash /opt/spark/sbin/stop-slave.sh

[Install]

WantedBy=multi-user.target

Enable and start the Spark Slave service.

systemctl enable --now spark-slave.service

Check the status of the Spark Slave service.

systemctl status spark-slave.service

Output:

● spark-slave.service - Apache Spark Slave

Loaded: loaded (/etc/systemd/system/spark-slave.service; enabled; vendor p>

Active: active (running) since Tue 2023-03-07 22:16:33 PKT; 34s ago

Process: 6937 ExecStart=/bin/bash /opt/spark/sbin/start-slave.sh spark://19>

Main PID: 6950 (java)

Tasks: 33 (limit: 10904)

Memory: 121.0M

CPU: 5.022s

CGroup: /system.slice/spark-slave.service

└─6950 /usr/lib/jvm/java-17-openjdk-17.0.6.0.10-3.el9_1.x86_64/bin>

Mar 07 22:16:30 spark-01.centlinux.com systemd[1]: Starting Apache Spark Slave.>

Mar 07 22:16:30 spark-01.centlinux.com bash[6937]: This script is deprecated, u>

Mar 07 22:16:31 spark-01.centlinux.com bash[6944]: starting org.apache.spark.de>

Mar 07 22:16:33 spark-01.centlinux.com systemd[1]: Started Apache Spark Slave.
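
The journal output above includes a deprecation warning from start-slave.sh. Since Spark 3.1 the same functionality is provided by start-worker.sh and stop-worker.sh. As a sketch only, the function below prints an equivalent unit file that uses the newer scripts and takes the master URL as a parameter. The names worker_unit and spark-worker.service are hypothetical, and the master URL in the usage note assumes the IP address from the environment specification.

```shell
# worker_unit MASTER_URL
# Prints a systemd unit for a Spark worker that uses the non-deprecated
# start-worker.sh / stop-worker.sh scripts (available since Spark 3.1).
worker_unit() {
    cat <<EOF
[Unit]
Description=Apache Spark Worker
After=network.target

[Service]
Type=forking
User=spark
Group=spark
ExecStart=/bin/bash /opt/spark/sbin/start-worker.sh $1
ExecStop=/bin/bash /opt/spark/sbin/stop-worker.sh

[Install]
WantedBy=multi-user.target
EOF
}
```

For example, `worker_unit spark://192.168.88.136:7077 > /etc/systemd/system/spark-worker.service`, followed by `systemctl daemon-reload`, would install it under the hypothetical unit name.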

Access Apache Spark Server

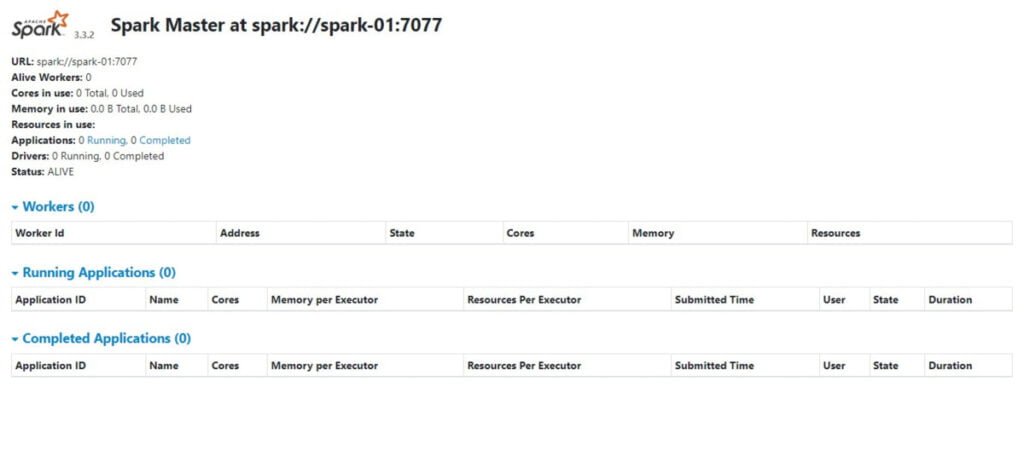

To access the Spark Master dashboard, open the URL http://spark-01.centlinux.com:8080 in a web browser.

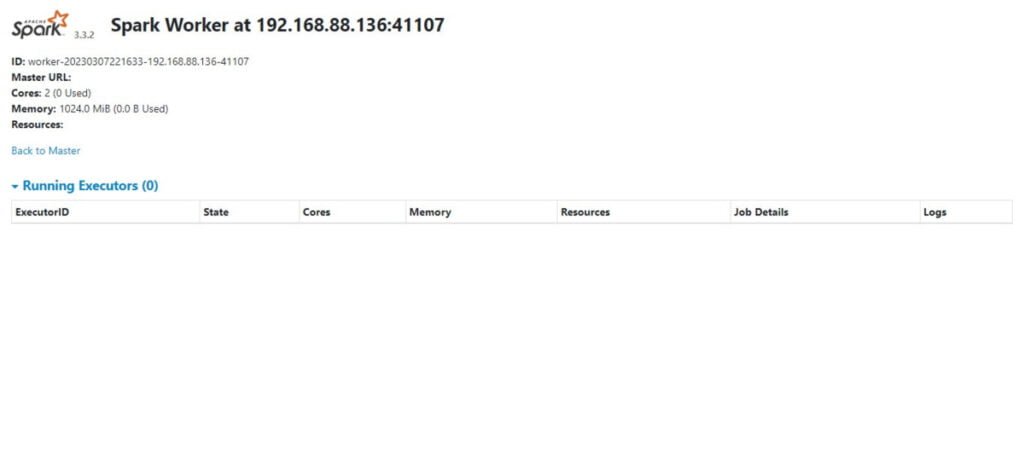

Similarly, you can access the Spark Slave (worker) dashboard by opening the URL http://spark-01.centlinux.com:8081 in a web browser.
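
Once both dashboards load, a quick smoke test is to submit the SparkPi example that ships with the Spark distribution. The function below is a sketch, not an official script. The name run_spark_pi is hypothetical, it assumes Spark lives under SPARK_HOME (falling back to /opt/spark as in this guide), and it locates the examples jar with a glob because the file name embeds the Scala and Spark versions.

```shell
# run_spark_pi MASTER_URL [SLICES]
# Submits the bundled SparkPi example to the given standalone master.
run_spark_pi() {
    master_url=$1
    slices=${2:-10}
    spark_home=${SPARK_HOME:-/opt/spark}
    # Reuse the positional parameters to hold the glob result
    set -- "$spark_home"/examples/jars/spark-examples_*.jar
    if [ ! -f "$1" ]; then
        echo "examples jar not found under $spark_home/examples/jars" >&2
        return 1
    fi
    "$spark_home/bin/spark-submit" \
        --class org.apache.spark.examples.SparkPi \
        --master "$master_url" \
        "$1" "$slices"
}
```

Run as the spark user, `run_spark_pi spark://192.168.88.136:7077` should print a line beginning with "Pi is roughly" among the log output, and the finished application should appear on the master dashboard.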

Final Thoughts

You now have Apache Spark installed on Rocky Linux 9, running as a standalone master and worker under systemd, with the firewall opened for the default ports and both dashboards reachable in a web browser.

If you need guidance or advice related to your Linux VPS, connect with me about my AWS and Linux administration services.

FAQs

1. Do I need to configure JAVA_HOME after installing Java for Apache Spark?

Yes, setting the JAVA_HOME environment variable ensures Spark can locate Java correctly, especially if multiple Java versions are installed.

2. What is the minimum RAM requirement to run Apache Spark on Rocky Linux 9?

The virtual machine in this guide runs a standalone master and worker with 2 GB of RAM, which is enough to try the installation. For real data processing workloads, at least 8 GB of RAM is recommended.

3. Can I run Apache Spark without root or sudo privileges?

You can run Spark with user-level permissions, but installation and configuration steps often require sudo access for dependencies and environment setup.

4. Why does Apache Spark require at least 20GB of free disk space?

Spark stores shuffle data, logs, and temporary files locally during processing, so sufficient disk space prevents runtime storage issues.

5. How do I troubleshoot executor lost errors in Spark?

This can be due to network timeouts or unhealthy worker nodes; increasing network timeout and monitoring node health usually helps resolve this issue.
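
For the first question above, one way to find a value for JAVA_HOME is to resolve the java command through its symbolic links and strip the trailing /bin/java. The sketch below does that. The name java_home_from_bin is hypothetical, and it assumes a `readlink` that supports the `-f` option, as the one on Rocky Linux does.

```shell
# java_home_from_bin JAVA_BINARY
# Resolves symlinks on the given java binary and prints the directory
# two levels up, which is the JDK or JRE home.
java_home_from_bin() {
    real=$(readlink -f "$1") || return 1
    dirname "$(dirname "$real")"
}
```

For example, `echo "export JAVA_HOME=$(java_home_from_bin "$(command -v java)")" >> /opt/spark/conf/spark-env.sh` would record the result in spark-env.sh, which Spark's startup scripts read.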
