Learn how to configure Varnish Cache as a load balancer on CentOS 7 with this step-by-step guide. Improve your website’s performance and manage traffic efficiently.

What is Varnish Cache?

Varnish Cache is an HTTP accelerator designed for content-heavy dynamic websites and APIs. It is often installed on the same machine as the web server, where it acts as the front end and accelerator for the hosted websites. Varnish Cache is free and open-source software distributed under the two-clause BSD license.

Varnish Cache is an open-source HTTP reverse proxy and caching solution designed to improve the performance, scalability, and reliability of web applications. It sits between a web server and the internet, caching content to reduce load times and increase efficiency for frequently accessed web pages and resources.

Key Features

- Caching Mechanism
  - HTTP Reverse Proxy: Varnish acts as an intermediary between clients and web servers, caching responses from the server and serving them directly to clients for subsequent requests.
  - Content Caching: Stores static content (like images, CSS, and JavaScript) and dynamic content (like HTML pages) to speed up response times.
- Performance Optimization
  - High-Speed Caching: Uses an efficient, in-memory caching system to deliver content quickly and handle high traffic loads.
  - HTTP Acceleration: Reduces server load by caching responses and serving them directly to users, decreasing the number of requests to the backend server.
- Load Balancing
  - Traffic Distribution: Can be configured as a load balancer to distribute incoming requests across multiple backend servers, improving application performance and availability.
  - Failover and Health Checks: Probes backend servers at regular intervals and routes traffic away from servers that fail their health checks.
- Flexible Configuration
  - VCL (Varnish Configuration Language): A powerful scripting language that allows administrators to define caching policies, request handling, and response rules.
  - Custom Rules: Create complex rules for caching, such as setting expiration times, defining cache purging policies, and customizing responses.
- Advanced Features
  - Content Invalidation: Supports mechanisms for purging or invalidating cached content based on changes to the underlying data.
  - Logging and Analytics: Provides detailed logs and counters (through tools such as varnishlog and varnishstat) to monitor cache performance, traffic patterns, and server health.
- Security Enhancements
  - Traffic Filtering: Control and filter incoming requests to prevent abuse and protect against malicious activities.
  - Secure Caching: The open-source Varnish Cache does not terminate SSL/TLS itself. For secure content delivery it is usually paired with a separate TLS terminator, such as Hitch or Nginx, placed in front of it.

How Varnish Cache Works

- Client Request
  - A client sends an HTTP request to the Varnish Cache server.
- Cache Lookup
  - Varnish checks if the requested content is already cached.
  - Cache Hit: If the content is in the cache, Varnish serves it directly to the client.
  - Cache Miss: If the content is not in the cache, Varnish forwards the request to the backend web server.
- Backend Response
  - The backend server processes the request and sends the response back to Varnish.
- Content Caching
  - Varnish stores the response in the cache for future requests based on the caching rules defined in VCL.
- Client Response
  - Varnish delivers the response to the client. For future requests, Varnish will serve the cached content if available.
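The hit and miss paths described above can be observed from the client side. The following is a minimal sketch, not part of the original setup: classify_cache_status is a hypothetical helper name, and it assumes the Varnish 4 and later behaviour where the X-Varnish response header carries two transaction IDs on a cache hit and one on a miss.

```shell
#!/usr/bin/env bash
# Classify a response as a cache HIT or MISS from its raw headers (read on stdin).
# Assumption: Varnish 4+ lists two transaction IDs in X-Varnish on a hit
# (this request plus the request that stored the object) and one ID on a miss.
classify_cache_status() {
  tr -d '\r' | awk '
    tolower($1) == "x-varnish:" { ids = NF - 1 }   # count the IDs after the header name
    END {
      if (ids >= 2)      print "HIT"
      else if (ids == 1) print "MISS"
      else               print "UNKNOWN"           # header absent: not served through Varnish
    }'
}

# Example use against a live server:
#   curl -sI http://varnish-cache-01.example.com | classify_cache_status
```

Requesting the same URL twice within the object's lifetime should typically report MISS first and HIT afterwards.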

Use Cases

- Website Performance Optimization
  - Speed up page load times for high-traffic websites and applications.
- Scalability Solutions
  - Improve the performance of web servers under heavy traffic loads.
- Load Balancing
  - Distribute requests across multiple backend servers to enhance reliability and performance.
- Content Delivery
  - Cache static and dynamic content for fast and efficient delivery.
- Security Enhancements
  - Implement request filtering rules in VCL, with SSL/TLS handled by a separate terminator placed in front of Varnish.

Varnish Cache vs. Other Caching Solutions

| Feature | Varnish Cache | Nginx | Apache Traffic Server |
|---|---|---|---|
| Caching | Advanced in-memory cache | Basic cache capabilities | Built-in caching features |
| Load Balancing | Yes | Yes | Yes |
| Configuration | VCL scripting language | Configuration files | Configuration files |
| Performance | High-performance caching | Good performance for caching | High performance, but complex |
| Flexibility | Highly flexible and customizable | Flexible, but less advanced for complex rules | Advanced features but less flexible |
| Security | Basic security features | Advanced security options | Advanced security options |

Popular Alternatives to Varnish Cache

- Nginx: A popular web server and reverse proxy with caching and load-balancing capabilities.

- Apache Traffic Server: A high-performance caching proxy server from the Apache Software Foundation.

- Squid: A caching proxy for web content that supports HTTP, HTTPS, and FTP.

Conclusion

Varnish Cache is a powerful tool for optimizing web performance, managing high traffic loads, and balancing requests across servers. Its advanced caching capabilities, flexible configuration options, and robust performance make it a preferred choice for many organizations looking to enhance their web infrastructure.

Varnish Cache supports multiple backend hosts, so we can also configure it as a reverse proxy that load balances a cluster of web servers.

Linux Server Specification

We have configured a CentOS 7 virtual machine with the following specifications:

- CPU – 3.4 GHz (1 Core)

- Memory – 1 GB

- Storage – 20 GB

- Operating System – CentOS 7.7

- Hostname – varnish-cache-01.example.com

- IP Address – 192.168.116.213/24

Install Apache on CentOS 7

Connect with varnish-cache-01.example.com using ssh as root user.

Build yum cache for standard CentOS 7 repositories.

yum makecache fast

Update CentOS 7 server packages.

yum update

Output:

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

* base: mirrors.ges.net.pk

* extras: mirrors.ges.net.pk

* updates: mirrors.ges.net.pk

No packages marked for update

Our CentOS 7 server is already up-to-date.

Install Apache HTTP server using yum command.

yum install -y httpd

Start and enable Apache web service.

systemctl enable --now httpd.service

Allow HTTP service in CentOS 7 firewall.

firewall-cmd --permanent --add-service=http

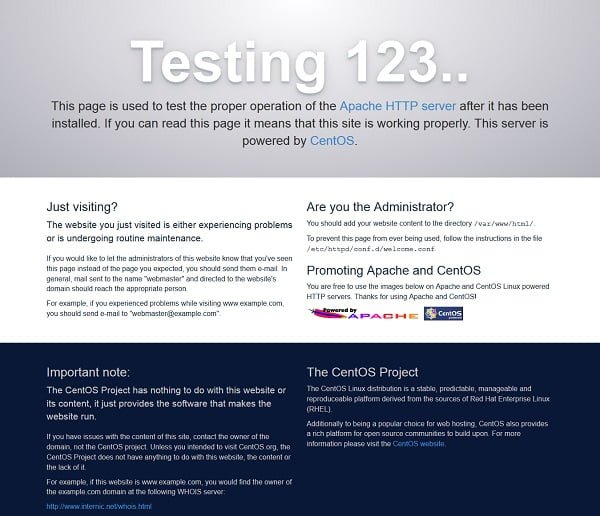

firewall-cmd --reload

Browse URL http://varnish-cache-01.example.com in a client’s browser.

Apache HTTP server is successfully installed and it is serving the default test page.

Configure Apache Virtual Hosts

We are configuring two virtual hosts here, each running on a different port.

Create document root directories for virtual hosts.

mkdir /var/www/html/{vhost1,vhost2}

Create default index page for Virtual Host 1.

cat > /var/www/html/vhost1/index.html << EOF
<html>
<head><title>Virtual Host1</title></head>
<body><h1>This is the default page of Virtual Host 1...</h1></body>
</html>
EOF

Similarly, create default index page for Virtual Host 2.

cat > /var/www/html/vhost2/index.html << EOF
<html>
<head><title>Virtual Host2</title></head>
<body><h1>This is the default page of Virtual Host 2...</h1></body>
</html>
EOF

Create configuration file for Virtual Host1.

vi /etc/httpd/conf.d/vhost1.conf

Add the following directives therein.

Listen 8081
<VirtualHost *:8081>
    DocumentRoot "/var/www/html/vhost1"
    ServerName vhost1.example.com
</VirtualHost>

Similarly, create configuration file for Virtual Host2.

vi /etc/httpd/conf.d/vhost2.conf

Add the following directives therein.

Listen 8082
<VirtualHost *:8082>
    DocumentRoot "/var/www/html/vhost2"
    ServerName vhost2.example.com
</VirtualHost>

Check Apache configurations for syntax errors.

httpd -t

Output:

Syntax OK
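The two virtual host files differ only in their number and port, so they could also be generated rather than typed by hand. The sketch below assumes the same paths and names used above; vhost_conf is a hypothetical helper, not an Apache tool.

```shell
#!/usr/bin/env bash
# Print an Apache virtual host definition for one port-based site.
# Hypothetical helper: the layout mirrors vhost1.conf and vhost2.conf above.
vhost_conf() {
  local n="$1" port="$2"
  cat <<EOF
Listen ${port}
<VirtualHost *:${port}>
    DocumentRoot "/var/www/html/vhost${n}"
    ServerName vhost${n}.example.com
</VirtualHost>
EOF
}

# Example (as root on the web server), followed by a syntax check:
#   for n in 1 2; do vhost_conf "$n" "808$n" > "/etc/httpd/conf.d/vhost$n.conf"; done
#   httpd -t
```

Writing to standard output keeps the helper easy to inspect before anything under /etc/httpd is touched.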

Since we are running Apache websites on non-default ports, we have to add these ports to the SELinux port labeling.

Check whether these ports are already added in SELinux.

semanage port -l | grep ^http_port_t

Output:

http_port_t tcp 80, 81, 443, 488, 8008, 8009, 8443, 9000

Add ports 8081 and 8082 to type http_port_t SELinux context.

semanage port -m -t http_port_t -p tcp 8081

semanage port -m -t http_port_t -p tcp 8082

Verify that these ports are added in SELinux port labeling.

semanage port -l | grep ^http_port_t

Output:

http_port_t tcp 8082, 8081, 80, 81, 443, 488, 8008, 8009, 8443, 9000
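The visual check above can also be scripted. This is a small sketch under the assumption that the listing has the single-line, comma-separated form shown in the output; port_has_http_label is a hypothetical helper and it does not understand port ranges such as 8000-8010.

```shell
#!/usr/bin/env bash
# Succeed if a TCP port appears on the http_port_t line of `semanage port -l`.
# Hypothetical helper: reads the listing on stdin, so it also works on saved output.
port_has_http_label() {
  local port="$1"
  awk -v port="$port" '
    $1 == "http_port_t" && $2 == "tcp" {
      for (i = 3; i <= NF; i++) {
        gsub(/,/, "", $i)            # strip the list separators
        if ($i == port) found = 1
      }
    }
    END { exit(found ? 0 : 1) }'
}

# Example:
#   semanage port -l | port_has_http_label 8081 && echo "8081 is labeled http_port_t"
```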

Now, we can safely reload our Apache configurations.

systemctl reload httpd.service

Allow 8081/tcp and 8082/tcp service ports in CentOS 7 firewall.

firewall-cmd --permanent --add-port={8081,8082}/tcp

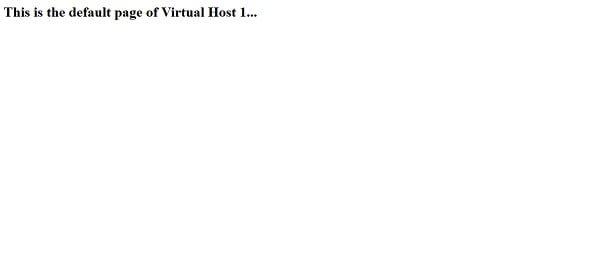

firewall-cmd --reload

Open URL http://varnish-cache-01.example.com:8081/ in a web browser.

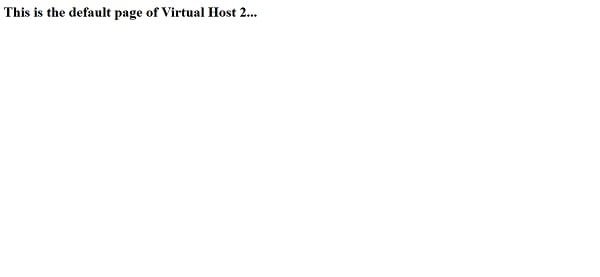

Open URL http://varnish-cache-01.example.com:8082/ in a web browser.

Both of our Apache virtual hosts have been configured successfully.

Install Varnish Cache on CentOS 7

Varnish Cache software is available in EPEL (Extra Packages for Enterprise Linux) yum repository.

Therefore, first we have to enable EPEL yum repository as follows.

yum install -y epel-release

Build cache for EPEL yum repository.

yum makecache

Now, we can install Varnish Cache software using yum command.

yum install -y varnish

We have installed the default version of Varnish Cache that is available in the EPEL yum repository. However, you can always download and install a later version of Varnish Cache from the official download page.

Configure Varnish Cache as Load Balancer

To configure Varnish Cache, we need to free port 80, which is currently used by the Apache HTTP server.

The directive that controls service port 80 is defined in the /etc/httpd/conf/httpd.conf file.

We can change it using a sed command.

sed -i "s/Listen 80/Listen 8080/" /etc/httpd/conf/httpd.conf

Restart the Apache service for the change to take effect.

systemctl restart httpd.service

Now, port 80 is available and we can use it for the Varnish Cache service.
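One caveat with the sed substitution above: its pattern is not anchored, so running it a second time would turn Listen 8080 into Listen 808080. A more defensive variant is sketched below; set_listen_port is a hypothetical helper, and it assumes the directive sits alone on its line as in the stock httpd.conf.

```shell
#!/usr/bin/env bash
# Change a "Listen <old>" directive to "Listen <new>" in an Apache config file.
# Hypothetical helper: the pattern is anchored at both ends, so only a line that
# is exactly "Listen <old>" is rewritten and repeated runs change nothing further.
set_listen_port() {
  local file="$1" old="$2" new="$3" tmp rc
  tmp="$(mktemp)" || return 1
  # Write through a temporary copy, then overwrite in place so that the file's
  # ownership, permissions, and SELinux context stay as they were.
  sed "s/^Listen ${old}\$/Listen ${new}/" "$file" > "$tmp" && cat "$tmp" > "$file"
  rc=$?
  rm -f "$tmp"
  return "$rc"
}

# Example (as root):
#   set_listen_port /etc/httpd/conf/httpd.conf 80 8080
```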

Edit Varnish Cache configuration file.

vi /etc/varnish/varnish.params

Locate and set the following directive therein.

VARNISH_LISTEN_PORT=80 #Default Port 6081

We have changed the Varnish Cache listening port from the default 6081 to 80.

It’s time to configure the backend for Varnish Cache server.

These settings are located in /etc/varnish/default.vcl file. We can easily replace this file with our custom configurations.

Rename the existing default.vcl file using mv command.

mv /etc/varnish/default.vcl /etc/varnish/default.vcl.org

Create a custom backend configuration file.

vi /etc/varnish/default.vcl

Add the following lines of code.

vcl 4.0;

import directors; # Load the directors

backend vhost1 {
    .host = "192.168.116.213";
    .port = "8081";
    .probe = {
        .url = "/";
        .timeout = 1s;
        .interval = 5s;
        .window = 5;
        .threshold = 3;
    }
}

backend vhost2 {
    .host = "192.168.116.213";
    .port = "8082";
    .probe = {
        .url = "/";
        .timeout = 1s;
        .interval = 5s;
        .window = 5;
        .threshold = 3;
    }
}

sub vcl_init {
    new lb = directors.round_robin(); # Creating a Load Balancer
    lb.add_backend(vhost1); # Add Virtual Host 1
    lb.add_backend(vhost2); # Add Virtual Host 2
}

sub vcl_recv {
    # send all traffic to the lb director:
    set req.backend_hint = lb.backend();
}

Enable and start Varnish Cache service.

systemctl enable --now varnish.service

Enable and start Varnish Cache logging service.

systemctl enable --now varnishlog.service

Verify the backend list using the following command.

varnishadm backend.list

Output:

Backend name Refs Admin Probe

vhost1(192.168.116.213,,8081) 1 probe Healthy 5/5

vhost2(192.168.116.213,,8082) 1 probe Healthy 5/5
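For scripted monitoring, the listing above can be filtered down to the backends that are not healthy. This is a rough sketch that assumes the Varnish 4.0 column layout shown in the output; unhealthy_backends is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Print the names of backends that `varnishadm backend.list` does not show as Healthy.
# Assumption: the Varnish 4.0 layout above, with the probe state on each backend line.
unhealthy_backends() {
  awk 'NF > 0 && $1 != "Backend" && $0 !~ /Healthy/ {
         sub(/\(.*/, "", $1)   # drop the "(host,,port)" suffix from the name
         print $1
       }'
}

# Example:
#   varnishadm backend.list | unhealthy_backends
```

An empty result means every listed backend passed its probe.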

Check our website’s response header.

curl -I http://varnish-cache-01.example.com

HTTP/1.1 200 OK
Date: Sun, 13 Oct 2019 16:24:07 GMT
Server: Apache/2.4.6 (CentOS)
Last-Modified: Sun, 13 Oct 2019 09:35:58 GMT
ETag: "7d-594c77a7e0839"
Content-Length: 125
Content-Type: text/html; charset=UTF-8
X-Varnish: 32770
Age: 0
Via: 1.1 varnish-v4
Connection: keep-alive

Open URL http://varnish-cache-01.example.com in a web browser.

We have successfully configured Varnish Cache. The load balancer now distributes user requests between Virtual Host 1 and Virtual Host 2 in round-robin fashion.
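The round-robin behaviour can be checked from the command line by counting which virtual host answers. The sketch below is an assumption-laden illustration: tally_titles is a hypothetical helper, and the example relies on Varnish's built-in hashing on URL and Host, so that a different query string on each request forces a cache miss and a fresh backend selection.

```shell
#!/usr/bin/env bash
# Tally which virtual host answered, based on the <title> of each HTML page on stdin.
# Hypothetical helper: prints one "title: count" line per distinct title.
tally_titles() {
  grep -o '<title>[^<]*</title>' \
    | sed 's/<[^>]*>//g' \
    | sort | uniq -c \
    | awk '{ c = $1; $1 = ""; sub(/^ /, ""); print $0 ": " c }'
}

# Example: each request uses a distinct (ignored) query string to avoid cache hits.
#   for i in 1 2 3 4; do
#     curl -s "http://varnish-cache-01.example.com/?try=$i"
#   done | tally_titles
```

Repeating the plain URL instead may keep showing the same page for a while, because the first response is served from the cache rather than from the next backend.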

Frequently Asked Questions (FAQs)

1. What is Varnish Cache, and why is it used?

Varnish Cache is a high-performance HTTP accelerator designed to speed up web applications by caching frequently requested content. It helps reduce server load, improve website performance, and handle high traffic efficiently.

2. What are the system requirements for installing Varnish on CentOS 7?

To install and configure Varnish on CentOS 7, you need:

- A 64-bit CentOS 7 server

- At least 2GB RAM and 2 CPU cores (recommended for optimal performance)

- Apache or Nginx web server (Varnish acts as a reverse proxy in front of the web server)

- Root or sudo privileges

3. How does Varnish Cache work with web servers like Apache or Nginx?

Varnish acts as an intermediary between the client and the web server. It caches responses from the web server and serves them directly to users, reducing the need for repeated backend processing. This significantly improves response times and reduces server resource usage.

4. How do I test if Varnish is working after configuration?

After configuring Varnish, you can test its functionality by running the following checks:

- Use curl -I http://your-domain.com and verify the Via header, which should indicate Varnish is handling requests.

- Check the Varnish cache hit/miss ratio using the varnishstat command.

- Monitor real-time Varnish logs with varnishlog to see how requests are being processed.
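As an illustration of the hit/miss check, the ratio can be computed from the one-shot counter dump. This sketch assumes the counter names MAIN.cache_hit and MAIN.cache_miss used by Varnish 4 and later; hit_ratio is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Compute the cache hit ratio from `varnishstat -1` output read on stdin.
# Assumption: counters are named MAIN.cache_hit and MAIN.cache_miss (Varnish 4+),
# with the cumulative value in the second column.
hit_ratio() {
  awk '
    $1 == "MAIN.cache_hit"  { hit  = $2 }
    $1 == "MAIN.cache_miss" { miss = $2 }
    END {
      total = hit + miss
      if (total == 0) { print "n/a"; exit }   # no lookups recorded yet
      printf "%.1f%%\n", 100 * hit / total
    }'
}

# Example:
#   varnishstat -1 | hit_ratio
```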

5. How can I customize Varnish Cache settings for better performance?

Varnish uses the Varnish Configuration Language (VCL) for fine-tuning caching rules. You can modify the default.vcl file to:

- Set custom caching rules for specific URLs.

- Exclude certain pages (like login or checkout pages) from caching.

- Adjust backend timeout settings to improve request handling.

Final Thoughts

Configuring Varnish Cache as a load balancer can significantly enhance your website’s performance and traffic management capabilities. By setting up Varnish Cache correctly, you can distribute incoming requests efficiently, reduce server load, and improve overall site responsiveness.
